Sieve and Intelligence: LLMs as Extractors of Metaphorical Entity Information Embedded in Data for Intelligence Generation

Abstract

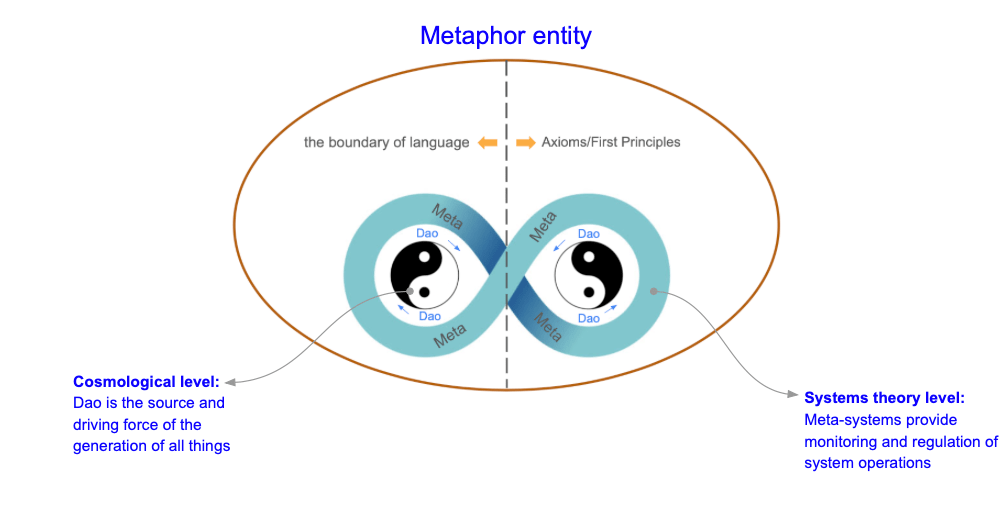

This paper proposes a novel conceptual framework that views Large Language Models (LLMs) as linguistic sieves that systematically filter and extract metaphorical entity information embedded within vast textual data. Based on Artificial Intelligence Semiotics Ecology theory, we argue that LLMs do not merely memorize or interpolate data, but function as dynamic sieves that extract useful metaphorical entity information structures, subsequently generating content when solving new tasks. This perspective combines cognitive linguistics, information theory, and AI architecture to provide a fresh perspective on understanding how language models transform metaphorical entity information into active problem-solving intelligence.

Keywords

Information parasitism; linguistic sieve; LLM; generative AI; cognitive linguistics; artificial intelligence semiotics ecology.

1. Introduction

The rapid development of Large Language Models (LLMs) has fundamentally transformed the fields of natural language processing and artificial intelligence. However, the fundamental mechanisms by which these models convert massive textual data into actionable knowledge still lack in-depth theoretical exploration. Meanwhile, the concept of artificial intelligence semiotics ecology indicates that certain metaphorical entity information “epiphytically/parasitically/symbiotically" exists within other host content, requiring extraction, interpretation, and reorganization to become usable knowledge. This paper proposes that LLMs can be viewed as linguistic sieves that systematically filter data, extract these metaphorical entity information units, and recombine them to generate outputs when facing new tasks.

2. Literature Review

2.1 Information Parasitism

According to epistemology, media theory (Parikka, 2007; Serres, 1982), and parasite theory, information parasitism refers to information existing parasitically within host structures, requiring extraction and interpretation, combined with other units, to become actionable knowledge.

2.1.1 Media Theory

Media Theory is a discipline that explores:

- How media influence human perception, social structures, and knowledge production.

- It analyzes not only content (message) but focuses more on the form, materiality, and infrastructure of the communication medium itself.

2.1.2 Parikka (2007): Jussi Parikka’s “Digital Contagions"

Core Perspective

- Viewing computer viruses as “media parasites"

Parikka combines media theory with biological metaphors, proposing:

- Viruses, malware, and hacker attacks are “information life forms parasitic on computational infrastructure"in modern digital ecology.

- They are not merely technical problems but reveal our cultural fears about information control, power structures, and technological boundaries.

2.1.3 Serres (1982): Michel Serres’ “The Parasite"

- “The parasite" is the archetypal structure of communication

Serres proposes in his book:

- All communication, energy transmission, and information flow contain “parasitic" relationships: ➔ Parasites in food chains obtain energy from hosts ➔ Social parasites (beggars, thieves, spies) extract benefits from systems ➔ Language is also parasitic: noise is both interference and a source of information innovation

- Noise/parasitism is the driving force of system evolution

Through parasitic interference, systems can reorganize and create new order and structure.

2.1.4 Integration: Parikka and Serres’ Position in Media Theory

According to epistemology and media theory (Parikka, 2007; Serres, 1982), information parasitism believes that information exists parasitically within host structures, requiring extraction and interpretation, combined with other units, to become actionable knowledge.

2.2 Sieve Methods in Conceptual Analogy: DOE Parameter Design and LLM Hyperparameter Tuning

In mathematics, sieve methods (such as the Sieve of Eratosthenes) find target subsets through systematic elimination (Apostol, 1976), representing a structured elimination method.

2.2.1 Engineering Field: Taguchi’s Parameter Design

Taguchi’s parameter design uses orthogonal arrays to screen optimal parameter combinations to optimize system robustness (Taguchi, 1986).

In statistical quality engineering, screening (DOE Parameter Design) means finding parameter combinations that make system performance most robust.

Genichi Taguchi’s Parameter Design is essentially a “statistical sieve“:

- First design an experimental matrix (Orthogonal Array), like designing sieve rules in number sequences;

- Gradually test combinations, “sieving out" noise factors that affect performance or quality layer by layer;

- What remains is parameter combinations that maintain high performance while being less susceptible to external changes.

This is not just an optimization method, but a philosophy of robust design.

2.2.2 LLM Architecture and Token Screening

LLMs selectively strengthen meaningful co-occurrences and language patterns through tokenization, attention mechanisms, and backpropagation weight updates (Vaswani et al., 2017; Brown et al., 2020). Screening (Hyperparameter Tuning) is an indispensable key step in training LLM models.

AI Hyperparameter Tuning: Neural Network Sieve Methods

In AI and deep learning fields, the concept of sieving is applied on a much larger scale.

- We face vast model spaces: Learning rate, Batch size, Optimizer type, Layer depth… Each parameter could change the model’s fate.

- Grid Search is like using a complete sieve, exhaustively searching all possibilities;

- Random Search is like randomly throwing stones to find optimal depth;

- Bayesian Optimization is an intelligent sieve method, predicting the next most likely optimal solution based on known results.

Just like mathematical sieve methods, the essence of AI model parameter tuning is “removing ineffective parameter combinations, keeping only the best paths".

3. Conceptual Framework: LLMs as Linguistic Sieves

In product design, Taguchi teaches us to balance variation minimization and control factor optimization. In AI model training, Transformers teach us to use massive parameters and intelligent sieve methods to find the most stable language understanding and generation paths.

When we move from DOE to LLMs, from prime number sieve methods to hyperparameter sieve methods, we see that from mathematics to engineering to intelligence, sieves

Perhaps this is the starting point of “intelligence": removing the superfluous to preserve the essential, allowing all things to reveal their true nature.

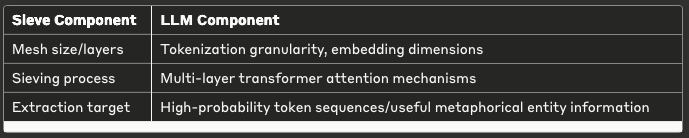

3.1 Sieve Method Analogy

We propose the following analogy:

Sieve ComponentLLM ComponentMesh size/layersTokenization granularity, embedding dimensionsSieving processMulti-layer transformer attention mechanismsExtraction targetHigh-probability token sequences/useful metaphorical entity information

3.2 Information Epiphytic/Parasitic/Symbiotic in Data

Artificial Intelligence Semiotics Ecology theory indicates that useful knowledge is epiphytic/parasitic/symbiotic within vast, irrelevant, or neutral textual contexts. The LLM training process serves as a filtering mechanism:

- Remove noise (irrelevant tokens)

- Preserve metaphorical entity information (recurring and context-dependent token patterns)

- Recombine extracted units to perform generative tasks (translation, summarization, reasoning)

4. Implications for Generative Intelligence

Viewing LLMs as linguistic sieves brings the following insights:

- Generative outputs are not purely original, but reconfiguration of metaphorical entity information units screened during pre-training.

- Fine-tuning is like refining mesh size, optimizing the screening process for specific tasks.

- Prompt engineering is dynamically adjusting sieve configuration, guiding the model to extract what kinds of metaphorical entity information units to generate responses.

5. Conclusion

The framework proposed in this paper combines Artificial Intelligence Semiotics Ecology theory with LLM architecture, redefining large-scale AI as systems that achieve problem-solving intelligence through screening linguistic epiphytic/parasitic/symbiotic units. Future research can combine epiphytic/parasitic/symbiotic-host ecological modeling with attention mechanisms to enhance the interpretability and controllability of AI generation processes.

篩與智 : LLM 作為提取存於資料中隱喻實體資訊以生成智能

摘要

本文提出一種嶄新的概念框架,將大型語言模型(LLMs)視為語言篩,系統性地篩選並提取嵌入於龐大文本資料中的隱喻實體資訊。基於人工智慧符號生態學理論,我們認為,LLM 並非單純記憶或插值資料,而是作為動態的篩子,萃取有用的隱喻實體資訊結構,進而在解決新任務時生成內容。此觀點結合認知語言學、資訊理論與AI架構,為理解語言模型如何將隱喻實體資訊轉化為主動的問題解決智能,提供全新視角。

關鍵詞

資訊寄生;語言篩;LLM;生成式AI;認知語言學;人工智慧符號生態學。

1. 引言

大型語言模型(LLMs)的迅速發展,徹底改變了自然語言處理與人工智慧領域。然而,這些模型如何將海量文本資料轉化為可行知識,其根本機制尚缺乏深入理論探討。另一方面,人工智慧符號生態學概念指出,某些隱喻實體資訊“附生/寄生/共生”於其他宿主內容中,需透過提取、解讀與重組,才能成為可用的知識。 本文提出,LLMs 可被視為語言篩,系統性地過濾資料,萃取這些隱喻實體資訊單元,並在面對新任務時重新組合生成輸出

2. 文獻回顧

2.1 資訊寄生

根據認識論,媒介理論(Parikka, 2007;Serres, 1982),與寄生者理論,資訊寄生為資訊以寄生方式存在於宿主結構內,需透過提取與詮釋,結合其他單元後,方能成為可行知識。

2.1.1 媒介理論(Media Theory)

媒介理論是一門探討:

- 媒介(Medium / Media)如何影響人類感知、社會結構與知識生產的學科。

- 不僅分析內容(message),更關注傳播載體本身的形式、物質性與基礎結構。

2.1.2 Parikka (2007): Jussi Parikka《數位傳染 (Digital Contagions)》

核心觀點

- 將電腦病毒視為“媒介寄生物”

Parikka 結合媒介理論與生物學隱喻,提出:

- 病毒、惡意軟體、黑客攻擊等,是現代數位生態中**“寄生在運算基礎設施之上的信息生命體”**。

- 它們不僅是技術問題,也揭示了我們對資訊控制、權力結構與技術邊界的文化恐懼。

2.1.3 Serres (1982): Michel Serres《寄生者 (The Parasite)》

- “寄生者”是溝通的原型結構

Serres 在書中提出:

- 一切交流、能量傳輸與資訊流動,都包含“寄生”關係:

➔ 食物鏈中的寄生者從宿主身上獲取能量

➔ 社會中的寄生者(乞丐、小偷、間諜)從系統中取利

➔ 語言也是寄生:噪音 (noise) 既是干擾,也是信息創新的來源

- 噪音/寄生是系統進化的動力

透過寄生干擾,系統得以重組,創造新的秩序與結構。

2.1.4 統合:Parikka 與 Serres 在媒介理論中的地位

根據認識論與媒介理論(Parikka, 2007;Serres, 1982),資訊寄生認為資訊以寄生方式存在於宿主結構內,需透過提取與詮釋,結合其他單元後,方能成為可行知識。

2.2 篩法在概念類比DOE參數設計與LLM超參數調整

在數學中,篩法(如埃拉托斯特尼篩法)透過系統性排除,找出目標子集(Apostol, 1976),是一種結構化剔除法。

2.2.1 工程領域:田口的參數設計則利用正交表篩選最佳參數組合以優化系統穩健性(Taguchi, 1986)

在統計品質工程裡,篩選(DOE Parameter Design)則是找到能讓系統表現最穩健的參數組合,

田口玄一(Genichi Taguchi)發明的參數設計(Parameter Design),本質上就是一個「統計篩子」:

- 先設計一個實驗矩陣(Orthogonal Array),

就像在數列裡設計篩法的規則一樣;

- 逐步測試各組合,

將影響性能或品質的噪音因子一層層“篩掉”;

- 最後留下的,就是既能維持高性能又不易受外界變化影響的參數組合。

這不只是一種最佳化方法,更是一種穩健設計的哲學。

2.2.2 LLM 架構與 tokens 篩選

LLM 透過分詞(tokenization)、注意力機制與反向傳播更新權重,選擇性強化有意義的共現與語言模式(Vaswani et al., 2017;Brown et al., 2020),篩選(Hyperparameter Tuning)為訓練LLM模型不可或缺的關鍵步驟。

AI 超參數調整:神經網路的篩法

來到AI與深度學習領域,篩的概念被更大規模地應用。

- 我們面對的是龐大的模型空間:

Learning rate, Batch size, Optimizer type, Layer depth…

每個參數都可能改變模型命運。

- Grid Search 就像使用了完整篩子,窮舉所有可能;

- Random Search 則像隨機丟石子找出最佳水深;

- Bayesian Optimization 則是智慧篩法,根據已知結果預測下一個最可能的最佳解。

就如同數學篩法一樣,AI模型調參的本質也是在「去除無效參數組合,只留下最佳路徑」。

3. 概念框架:LLM 作為語言篩

在產品設計裡,田口教我們把握變異最小化與控制因子最適化的平衡。在AI模型訓練裡,Transformer教我們用巨量參數與智慧篩法找到最穩定的語言理解與生成路徑。

當我們從DOE走到LLM,從質數篩法走到超參數篩法,我們看到的從數學到工程再到智能,篩子

也許,這就是“智”的起點:去蕪存菁,萬物得以顯現其本質。

3.1 篩法類比

我們提出以下類比:

篩子組件 LLM 組件

網孔大小 / 層次 分詞細度、embedding 維度

篩選過程 多層 transformer 注意力機制

被提取目標 高機率 tokens 序列 / 有用隱喻實體資訊

3.2 資料中的資訊附生/寄生/共生

人工智慧符號生態學理論指出,有用知識附生/寄生/共生於龐大、不相關或中性的文本脈絡之中。LLM 的訓練過程作為一種篩選:

- 去除噪音(無關 tokens)

- 保留隱喻實體資訊(重現且上下文依賴的 token patterns)

- 重新組合提取單元,以執行生成任務(翻譯、摘要、推理)

4. 對生成智能的啟示

將 LLM 視為語言篩帶來以下啟示:

- 生成輸出並非純粹原創,而是在預訓練過程中篩選出的隱喻實體資訊單元的再配置。

- 微調(fine-tuning)就像精細化網孔,針對特定任務最佳化篩選過程。

- Prompt engineering 則是動態調整篩子配置,引導模型提取何種隱喻實體資訊單元以生成回應。

5. 結論

本文提出的框架,將人工智慧符號生態學理論與 LLM 架構結合,重新定義大型 AI 為通過篩選語言附生/寄生/共生單元來實現問題解決智能的系統。未來研究可結合附生/寄生/共生-宿主生態建模與注意力機制,增強 AI 生成過程的可解釋性與可控性。

Reference:

- Apostol, T. M. (1976). Introduction to Analytic Number Theory. Springer.

- Brown, T. et al. (2020). Language Models are Few-Shot Learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

- Parikka, J. (2007). Digital Contagions: A Media Archaeology of Computer Viruses. Peter Lang.

- Serres, M. (1982). The Parasite. University of Minnesota Press.

- Taguchi, G. (1986). Introduction to Quality Engineering: Designing Quality into Products and Processes. Asian Productivity Organization.

- Vaswani, A. et al. (2017). Attention is All You Need. Advances in Neural Information Processing Systems, 30, 5998–6008.

發表留言